Voice AI Integration: Common Roadblocks and Practical Fixes

Voice AI can reshape customer experiences and streamline operations, but the path to reliable deployment is full of technical and operational bumps. This article breaks down the real challenges organizations face when adding voice AI: speech recognition accuracy, contextual understanding, system integration, and data privacy. We’ll explain why these issues matter and how to tackle them so voice automation delivers measurable value.

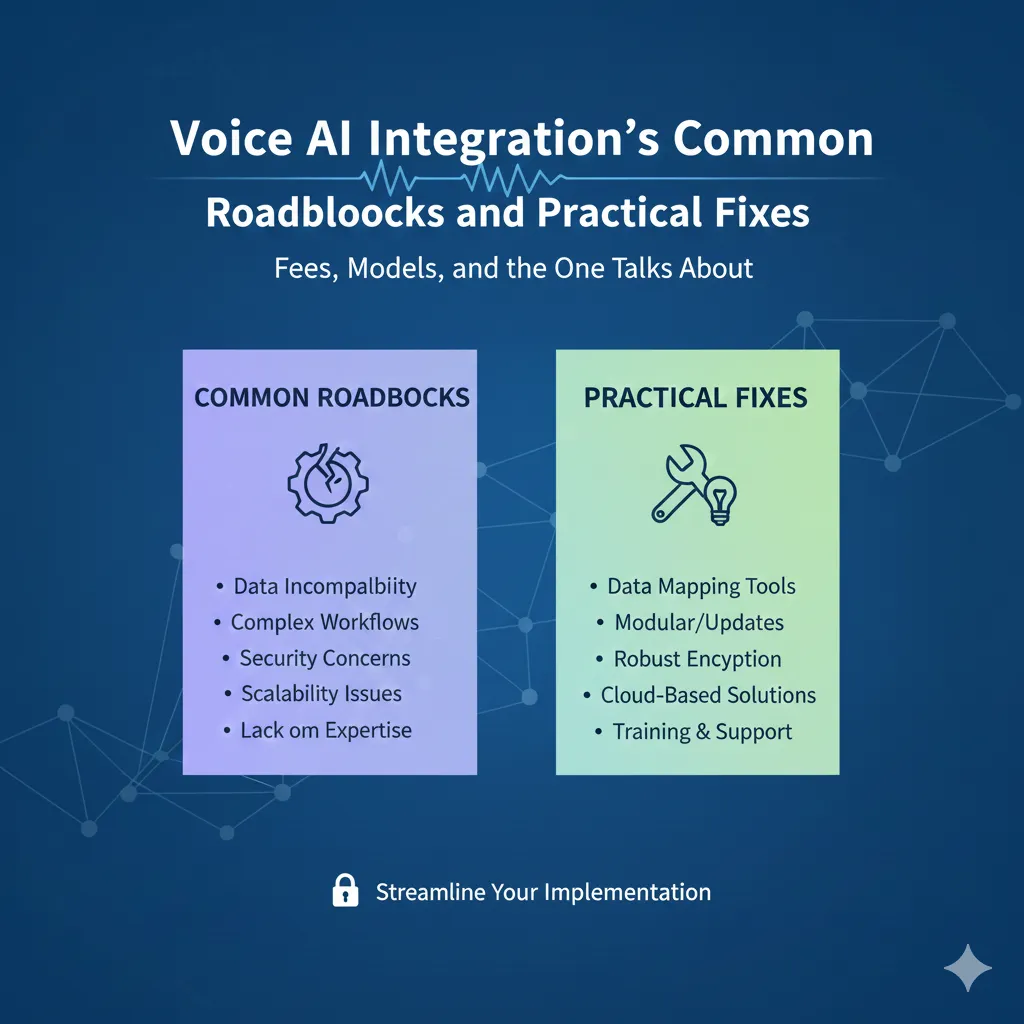

Integrating voice AI isn’t just a technical exercise. From noisy environments that confuse models to brittle connections with legacy systems, a number of gaps can undermine performance. Here we outline the main integration hurdles, practical strategies for preserving context in conversation, and approaches to tie voice AI into your existing stack. We’ll also show how The Power Labs’ solutions help teams navigate these issues faster and with less risk.

What Are the Main Challenges of Voice AI Integration?

Voice AI projects often stumble on predictable problems that reduce accuracy and user trust. Recognizing these pain points up front helps teams prioritize work that improves reliability and adoption.

How Do Speech Recognition Accuracy Issues Impact Voice AI Performance?

Speech recognition accuracy is the foundation of any voice experience. Misheard words or phrases cause failed tasks and frustrate users, especially when models haven't been trained on diverse accents, dialects, or domain-specific terms. Accuracy drops further in noisy settings or when audio quality is poor. Improving results requires robust models, diverse training data, continuous tuning, and noise-robust audio processing, backed by monitoring that catches regressions in the field.
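One common way to monitor recognition quality over time is word error rate (WER): the word-level edit distance between a reference transcript and the recognizer's output, divided by the number of reference words. The sketch below is a minimal illustration, not a production metric. The function name and the simple lowercase-and-split normalization are assumptions made for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    # Assumption: lowercase + whitespace split is enough normalization here.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word dropped (deletion)
                curr[j - 1] + 1,     # extra hypothesis word (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("book a table for two", "book the table for you"))
```

Tracking this number per accent group, device type, or noise condition, rather than as a single average, tends to make regressions easier to spot.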

What Role Does Background Noise Play in Voice AI Accuracy?

Ambient noise is one of the most common real-world factors that reduce transcription quality. Cafés, call centers, and streets introduce competing sounds that mask speech or distort intent. Effective mitigation uses on-device preprocessing, adaptive noise suppression, and beamforming at the microphone level, combined with models trained on realistic audio conditions. Together, these steps make voice interfaces more dependable in everyday environments.

How Can Contextual Understanding Be Ensured in Voice AI Conversations?

Context is what turns a one-off command into a useful conversation. Without it, interactions feel mechanical and often fail to resolve user needs.

What Are the Limitations of Natural Language Processing in Voice AI?

NLP drives understanding, but it still struggles with idioms, ambiguous phrasing, and rapidly changing domain language. Out-of-domain queries or niche terminology can break comprehension. Addressing these limits means combining strong base models with domain-specific fine-tuning, intent disambiguation, and continual learning from real user interactions to better capture intent and nuance.

How Does Multi-turn Dialogue Enhance Voice Bot Interactions?

Multi-turn dialogue keeps conversational context across exchanges so users don't have to repeat themselves. It enables follow-up questions, slot-filling, and logical flows that feel natural. Implementing multi-turn systems involves session management, context windows, and memory strategies that balance short-term context with privacy and performance constraints, all of which improve task completion and user satisfaction.
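To make session management and slot-filling concrete, here is a minimal in-memory sketch. The `DialogueManager` class, the `book_appointment` intent, and the slot names are hypothetical, and a real system would receive intents and slots from an NLU component rather than as function arguments. The session expiry (`ttl_seconds`) illustrates one simple way to limit how long context is retained.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Session:
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)
    last_seen: float = 0.0


class DialogueManager:
    # Hypothetical intent and the slots it needs before it can be fulfilled.
    REQUIRED_SLOTS: Dict[str, List[str]] = {"book_appointment": ["date", "time"]}

    def __init__(self, ttl_seconds: float = 300.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._sessions: Dict[str, Session] = {}

    def _get_session(self, session_id: str, now: float) -> Session:
        session = self._sessions.get(session_id)
        # Start fresh if the session is new or its context has expired.
        if session is None or now - session.last_seen > self.ttl_seconds:
            session = Session()
            self._sessions[session_id] = session
        session.last_seen = now
        return session

    def handle_turn(self, session_id: str, intent: Optional[str],
                    slots: Dict[str, str], now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        session = self._get_session(session_id, now)
        if intent is not None:
            session.intent = intent
        session.slots.update(slots)  # carry slots across turns

        if session.intent is None:
            return "How can I help?"
        required = self.REQUIRED_SLOTS.get(session.intent, [])
        missing = [name for name in required if name not in session.slots]
        if missing:
            return f"What {missing[0]} would you like?"

        summary = ", ".join(f"{k}={v}" for k, v in sorted(session.slots.items()))
        confirmed_intent = session.intent
        del self._sessions[session_id]  # drop context once the task is done
        return f"Confirmed {confirmed_intent}: {summary}"
```

In this sketch the user can say "book an appointment for Friday" and then simply answer "3pm" on the next turn; the manager fills the remaining slot without asking for the date again.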

What Are the Key Hurdles in Integrating Voice AI with Existing Business Systems?

Voice AI only delivers value if it connects cleanly to the systems users rely on. Integration gaps translate to slow responses, inconsistent data, and frustrated customers.

How Does System Integration Affect Voice Bot Efficiency?

Poor integration creates latency and brittle behavior. Voice systems must exchange data with CRMs, ticketing tools, and backend services in real time to fulfill requests. Reliable integration relies on well-defined APIs, middleware that handles retries and transformations, and clear SLAs for downstream services so the voice bot can respond quickly and accurately.
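The retry handling that middleware typically provides can be sketched as exponential backoff with jitter. This is an illustrative pattern rather than any specific product's behavior: `TransientError`, `call_with_retries`, and the default delays are assumptions chosen for the example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, 5xx)."""


def call_with_retries(operation: Callable[[], T],
                      max_attempts: int = 3,
                      base_delay: float = 0.2,
                      max_delay: float = 2.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run operation, retrying transient failures with capped backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and let the caller fall back gracefully
            # Backoff ceiling doubles each attempt, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: wait a random time up to the ceiling so many
            # clients do not retry in lockstep.
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

For a voice bot, the total retry budget should stay well under the pause a caller will tolerate, which is why the delays here are short and capped.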

What Strategies Facilitate Seamless Voice AI and CRM Integration?

Successful CRM integration uses secure APIs, role-based access, and event-driven patterns (webhooks, message queues) so the voice layer can read and write customer context without delays. Mapping voice intents to CRM objects, adding simple personalization tokens, and ensuring audit trails for actions are practical steps that deliver immediate, measurable improvements in both service speed and personalization.
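As a rough illustration of mapping voice intents to CRM objects with an audit trail, the sketch below builds an event that could be placed on a message queue. The intent names, the `Contact` and `Case` object types, and the event shape are all hypothetical; a real CRM will have its own schema and API.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical mapping from voice intents to (CRM object type, action).
INTENT_TO_CRM = {
    "update_address": ("Contact", "update"),
    "open_ticket": ("Case", "create"),
}


def build_crm_event(intent: str, customer_id: str, fields: Dict[str, str],
                    actor: str = "voice-bot",
                    now: Optional[datetime] = None) -> dict:
    """Translate a recognized intent into a CRM event with audit metadata."""
    if intent not in INTENT_TO_CRM:
        raise KeyError(f"no CRM mapping for intent {intent!r}")
    object_type, action = INTENT_TO_CRM[intent]
    timestamp = now if now is not None else datetime.now(timezone.utc)
    return {
        "object": object_type,
        "action": action,
        "customer_id": customer_id,
        "fields": dict(fields),
        # Audit trail: who acted, on which intent, and when.
        "audit": {
            "actor": actor,
            "intent": intent,
            "timestamp": timestamp.isoformat(),
        },
    }


def publish(event: dict, queue: List[str]) -> None:
    """Stand-in for a message queue producer: serialize and enqueue."""
    queue.append(json.dumps(event, sort_keys=True))
```

Rejecting unmapped intents explicitly, instead of guessing a CRM object, keeps unexpected voice input from writing to the wrong record.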

How Are Data Privacy and Security Challenges Addressed in Voice AI Solutions?

Trust is non-negotiable. Voice systems often handle sensitive personal or transactional data, so protecting that data is essential to adoption.

What Are the Data Privacy Concerns with Voice AI?

Users worry about how voice recordings and transcriptions are stored and used. Key concerns include unintended recording, long-term retention, and secondary uses of data. Addressing them requires explicit consent flows, strict retention policies, encryption in transit and at rest, anonymization where possible, and clear privacy notices that explain data practices in plain language.
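Two of these controls, anonymization and retention limits, can be sketched in a few lines. The regular expressions below are deliberately simple heuristics for illustration and will miss many forms of personal data; the function names and placeholder tokens are assumptions, and a real deployment would rely on a vetted redaction service and a policy agreed with legal and compliance teams.

```python
import re
from datetime import datetime, timedelta

# Illustrative patterns only: 13 to 16 digits with optional separators,
# and a basic email shape.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(transcript: str) -> str:
    """Replace card-like numbers and email addresses with placeholders."""
    without_emails = EMAIL_PATTERN.sub("[EMAIL]", transcript)
    return CARD_PATTERN.sub("[CARD]", without_emails)


def is_expired(recorded_at: datetime, retention_days: int,
               now: datetime) -> bool:
    """True when a recording is older than the retention window."""
    return now - recorded_at > timedelta(days=retention_days)
```

Running redaction before transcripts are stored, and a scheduled job that deletes anything `is_expired` flags, keeps both controls automatic rather than dependent on manual cleanup.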

How Do Responsible AI Principles Mitigate Security Risks?

Responsible AI frameworks reduce risk by embedding transparency, accountability, and governance into development and operations. That means access controls, logging, model explainability where appropriate, bias testing, and regulatory compliance (GDPR, CCPA). Regular security audits and incident-response plans round out a program that protects users and the business.

What User-Centric Design and Adoption Strategies Improve Voice AI Acceptance?

Technology succeeds when people find it helpful and easy to use. Thoughtful design and onboarding accelerate adoption and reduce abandonment.

How Does UX Design Influence Voice Bot User Reluctance?

UX design determines whether users perceive voice as frictionless or frustrating. Clear prompts, graceful fallbacks for misunderstanding, and predictable behavior reduce hesitation. Prioritizing simplicity, contextual hints, and immediate feedback makes first-time experiences feel safe and useful, which drives repeat use.

What Onboarding Practices Enhance Voice AI Adoption?

Good onboarding sets expectations and teaches value quickly. Short guided tours, example phrases, and easy access to help reduce confusion. Collecting early feedback and iterating on the onboarding flow helps teams remove blockers and surface missing capabilities that matter most to users.

How Does The Power Labs’ AI Voice Bot Overcome Voice AI Integration Challenges?

The Power Labs builds voice systems with integration, privacy, and real-world robustness in mind. Our approach combines production-ready models, engineered audio pipelines, and integration tooling so teams move from pilot to scale with minimal disruption.

What Features of The Power Labs’ Four-Bot System Address Voice AI Difficulties?

Our Four-Bot System tackles common failure points: enhanced speech models for better recognition, context-aware components that preserve conversation state, and flexible connectors that link voice to your backend systems. Together these features reduce errors, improve task completion rates, and make voice interactions feel natural.

How Does the Responsible AI Framework Ensure Ethical Voice Bot Deployment?

We apply a Responsible AI Framework across development and deployment: clear data handling policies, privacy-first defaults, auditing, and compliance checks. This ensures voice bots behave ethically, protect user data, and meet regulatory requirements so organizations can scale with confidence.

| Feature | Description | Benefit |
| --- | --- | --- |
| Advanced Speech Recognition | Model ensembles and domain tuning to improve transcription accuracy | Fewer misinterpretations and smoother user journeys |
| Contextual Understanding | Session-aware dialogue management that keeps track of intent and slots | More natural, goal-oriented conversations |
| Seamless Integration | Prebuilt APIs and connectors for common CRMs and backend systems | Faster time-to-value and reliable data flow |

The Power Labs’ AI Voice Bot demonstrates how targeted engineering and product design remove the common barriers to voice AI success, giving businesses the tools to deliver consistent, secure, and useful voice experiences.

Frequently Asked Questions

What are the benefits of using voice AI in business?

Voice AI increases accessibility, speeds up common tasks, and enables hands-free interactions that customers and employees value. It can boost engagement, reduce handling time, and surface behavioral insights from conversational data, all of which support better service and operational efficiency.

How can businesses measure the success of their voice AI integration?

Track KPIs like intent recognition accuracy, task completion rate, average handling time, and customer satisfaction (CSAT). Combine quantitative metrics with qualitative feedback to identify gaps and prioritize improvements. Regular A/B testing and usage analytics help prove ROI and guide iteration.
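These KPIs are straightforward to compute once each call is logged with a few fields. The sketch below assumes a hypothetical `CallRecord` shape and defines CSAT as the share of survey responses scoring 4 or 5 on a 5-point scale, which is one common convention; your own definition may differ.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CallRecord:
    intent_correct: bool         # did the bot identify the right intent?
    task_completed: bool         # did the caller finish the task?
    handling_seconds: float      # total call duration
    csat: Optional[int] = None   # 1-5 survey score, None if not answered


def compute_kpis(records: List[CallRecord]) -> Dict[str, Optional[float]]:
    """Aggregate per-call logs into the headline voice AI metrics."""
    if not records:
        raise ValueError("at least one call record is required")
    total = len(records)
    ratings = [r.csat for r in records if r.csat is not None]
    return {
        "intent_accuracy": sum(r.intent_correct for r in records) / total,
        "task_completion_rate": sum(r.task_completed for r in records) / total,
        "avg_handling_seconds": sum(r.handling_seconds for r in records) / total,
        # Assumption: CSAT = share of answered surveys with a score of 4 or 5.
        "csat": (sum(score >= 4 for score in ratings) / len(ratings)
                 if ratings else None),
    }
```

Computing the same dictionary for each arm of an A/B test gives a like-for-like comparison between the current flow and a proposed change.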

What industries can benefit the most from voice AI technology?

Voice AI helps any industry that relies on frequent customer interaction or hands-free workflows: retail, healthcare, finance, utilities, and customer support are strong candidates. Use cases range from voice self-service and appointment booking to in-vehicle assistants and field operations.

What are the future trends in voice AI technology?

Expect deeper personalization, better multi-turn contextual understanding, and tighter integrations with IoT and AR experiences. Advances in on-device inference and privacy-preserving techniques will also expand where and how voice can be deployed safely.

How can businesses ensure compliance with regulations when using voice AI?

Adopt clear consent and data-retention policies, encrypt audio and transcripts, and document processing activities. Work with legal and compliance teams to align on GDPR, CCPA, and local rules, and perform regular audits to verify practices remain compliant.

What role does user feedback play in improving voice AI systems?

User feedback is essential. It reveals where the model misunderstands intent, highlights missing vocabulary, and surfaces UX friction. Routinely collecting and acting on feedback ensures the system evolves to meet real user needs and improves long-term performance.

Conclusion

Deploying voice AI successfully means solving accuracy, context, integration, and privacy challenges in parallel. With the right engineering practices and user-centered design, businesses can unlock voice-driven efficiency and engagement. The Power Labs’ tools and frameworks are built to accelerate that journey, helping teams move from promising pilots to reliable, scalable voice experiences.